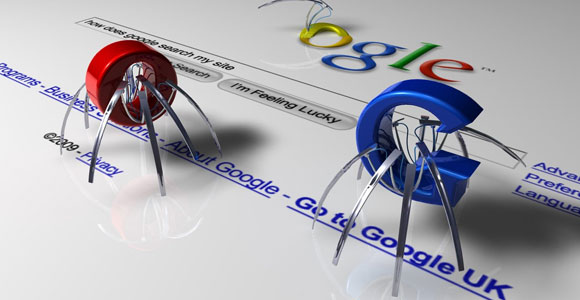

A website crawler, also called a web spider (and sometimes loosely a web scraper, although scraping usually refers to the data-extraction step), is a software program that systematically browses the web, visiting pages and collecting data from them. It starts from one or more seed URLs and follows the links it finds to discover and index further pages, typically to gather information or to monitor websites for changes.
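The start-from-a-URL-and-follow-links loop can be sketched in Python as a breadth-first traversal. This is a minimal illustration using only the standard library, not how any particular product works: the `fetch` function it receives is a hypothetical stand-in for real HTTP code (which would also need timeouts, error handling, and robots.txt checks), the crawl is not restricted to one domain, and `max_pages` acts as a simple safety cap.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl starting from start_url.

    `fetch` (hypothetical, supplied by the caller) takes a URL and returns the
    page's HTML as a string, or None if the page could not be retrieved.
    Returns the URLs that were successfully visited, in visit order.
    """
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # unreachable page: skip it
        visited.append(url)
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so that
            # /blog and /blog#top count as the same page.
            absolute, _ = urldefrag(urljoin(url, href))
            if absolute.startswith(("http://", "https://")) and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


if __name__ == "__main__":
    # A tiny in-memory "site" stands in for real HTTP requests.
    site = {
        "https://example.com/": '<a href="/about">About</a> <a href="/blog#top">Blog</a>',
        "https://example.com/about": '<a href="/">Home</a>',
        "https://example.com/blog": '<a href="https://example.com/about">About</a>',
    }
    print(crawl("https://example.com/", site.get))
```

The `seen` set is what keeps the crawler from looping forever on pages that link back to each other.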

Benefits of using a website crawler include:

Content monitoring: Website crawlers can regularly scan websites and monitor changes in content, such as new pages, updated information, or deleted content. This is valuable for staying up to date with competitors, tracking industry trends, or ensuring your own website is functioning correctly.
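One common way to detect content changes is to store a hash of each page on every crawl and compare the snapshots. The sketch below assumes each snapshot is a plain dict mapping a URL to the SHA-256 digest of its content; the function names are hypothetical.

```python
import hashlib


def fingerprint(html):
    """Return a SHA-256 hex digest of a page's content."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def diff_snapshots(old, new):
    """Compare two crawl snapshots (dicts of URL -> content hash).

    Returns (added, changed, removed) as sorted lists of URLs.
    """
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(url for url in old.keys() & new.keys() if old[url] != new[url])
    return added, changed, removed


if __name__ == "__main__":
    monday = {"/": fingerprint("<h1>Home</h1>"), "/sale": fingerprint("20% off")}
    tuesday = {
        "/": fingerprint("<h1>Home</h1>"),
        "/sale": fingerprint("30% off"),
        "/new": fingerprint("New!"),
    }
    print(diff_snapshots(monday, tuesday))  # → (['/new'], ['/sale'], [])
```

Hashing raw HTML flags every change, including rotating ads or timestamps; in practice you may prefer to hash only the extracted main content.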

SEO analysis: Crawlers can analyze various aspects of a website for search engine optimization (SEO) purposes. They can identify broken links, analyze metadata and header tags, assess page load speed, and provide insights to improve search engine rankings.
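As a rough illustration of metadata analysis, the following sketch parses a page with Python's built-in `html.parser` and reports a few on-page checks. The rules themselves (a non-empty `<title>`, a meta description, exactly one `<h1>`) are common heuristics chosen for this example, not an official search-engine standard.

```python
from html.parser import HTMLParser


class SeoAudit(HTMLParser):
    """Collects the <title>, meta description, and <h1> headings of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.h1s = []
        self._current = None  # "title" or "h1" while capturing its text

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")
        elif tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.h1s.append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1s[-1] += data


def audit(html):
    """Return a list of human-readable SEO warnings for one page."""
    parser = SeoAudit()
    parser.feed(html)
    warnings = []
    if not parser.title.strip():
        warnings.append("missing <title>")
    if not parser.description:
        warnings.append("missing meta description")
    if len(parser.h1s) != 1:
        warnings.append(f"expected exactly one <h1>, found {len(parser.h1s)}")
    return warnings


if __name__ == "__main__":
    page = "<html><head><title>Shop</title></head><body><h1>Deals</h1><h1>More</h1></body></html>"
    print(audit(page))  # → ['missing meta description', 'expected exactly one <h1>, found 2']
```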

Data extraction: Website crawlers can extract specific data from web pages, such as product information, pricing details, or user reviews. This is useful for competitive research, market analysis, or gathering data for business intelligence purposes.
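Data extraction usually means locating specific elements in the page markup. This sketch assumes a hypothetical listing page where product names and prices sit in elements with the CSS classes `product-name` and `price`; real sites use their own markup, and third-party libraries such as Beautiful Soup or lxml offer more convenient selectors than the standard-library parser used here.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collects the text of every element carrying one of the given CSS classes."""

    def __init__(self, classes):
        super().__init__()
        self.wanted = set(classes)
        self.results = {name: [] for name in classes}
        self._active = None  # class currently being captured
        self._tag = None     # tag name of the element being captured
        self._depth = 0      # nesting count of that tag name

    def handle_starttag(self, tag, attrs):
        if self._active is not None:
            if tag == self._tag:
                self._depth += 1  # nested element with the same tag name
            return
        for cls in (dict(attrs).get("class") or "").split():
            if cls in self.wanted:
                self._active, self._tag, self._depth = cls, tag, 1
                self.results[cls].append("")
                return

    def handle_endtag(self, tag):
        if self._active is not None and tag == self._tag:
            self._depth -= 1
            if self._depth == 0:
                self._active = None

    def handle_data(self, data):
        if self._active is not None:
            self.results[self._active][-1] += data


def extract_products(html):
    """Pair up product names and prices (assumes the hypothetical markup above)."""
    parser = ClassTextExtractor(["product-name", "price"])
    parser.feed(html)
    names = [text.strip() for text in parser.results["product-name"]]
    prices = [text.strip() for text in parser.results["price"]]
    return list(zip(names, prices))


if __name__ == "__main__":
    listing = """
    <div class="item"><span class="product-name">Desk lamp</span> <span class="price">$24.00</span></div>
    <div class="item"><span class="product-name">Office chair</span> <span class="price">$129.00</span></div>
    """
    print(extract_products(listing))  # → [('Desk lamp', '$24.00'), ('Office chair', '$129.00')]
```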

Link analysis: Crawlers can identify internal and external links on a website, helping to analyze site structure, identify broken links, and improve overall navigation and user experience.
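Separating internal from external links mostly comes down to resolving each `href` against the page URL and comparing hosts. A minimal sketch, assuming that only an exact host match counts as internal (so `blog.example.com` would be external to `example.com`) and that HTTP status codes for links have already been recorded by some earlier request step; both function names are hypothetical.

```python
from urllib.parse import urljoin, urlparse


def classify_links(page_url, hrefs):
    """Split a page's hrefs into (internal, external) lists of absolute URLs."""
    site_host = urlparse(page_url).netloc.lower()
    internal, external = [], []
    for href in hrefs:
        absolute = urljoin(page_url, href)
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript:, and similar links
        if parsed.netloc.lower() == site_host:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external


def broken_links(status_by_url):
    """Return URLs whose request failed (status None) or returned 4xx/5xx."""
    return sorted(
        url for url, status in status_by_url.items() if status is None or status >= 400
    )


if __name__ == "__main__":
    internal, external = classify_links(
        "https://example.com/docs/",
        ["intro", "/pricing", "https://other.org/ref", "mailto:hi@example.com"],
    )
    print(internal)  # → ['https://example.com/docs/intro', 'https://example.com/pricing']
    print(external)  # → ['https://other.org/ref']
```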

When choosing a website crawler, consider the following factors:

Features and functionality: Determine which features matter for your needs, such as content monitoring, SEO analysis, data extraction, scheduling options, and reporting.

Scalability and performance: Consider the size and complexity of the websites you intend to crawl. Ensure that the crawler you choose can handle large-scale crawling efficiently and provide fast and accurate results.
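For large sites, much of a crawler's time is spent waiting on the network, so fetching pages concurrently is a common way to scale. The sketch below uses a thread pool from Python's standard library; `fetch` is a hypothetical caller-supplied function, and a production crawler would also cap concurrency per host so it does not overload the sites it visits.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_all(urls, fetch, max_workers=8):
    """Fetch many URLs concurrently; return a dict mapping each URL to fetch(url).

    `fetch` should catch its own network errors, because an exception raised
    inside it is re-raised here when its result is collected.
    """
    urls = list(urls)  # materialize once: the URLs are iterated twice below
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the input URLs.
        results = list(pool.map(fetch, urls))
    return dict(zip(urls, results))


if __name__ == "__main__":
    def fake_fetch(url):
        # Stand-in for an HTTP GET that returns a fake page body.
        return f"<html>{url}</html>"

    pages = fetch_all(["https://example.com/a", "https://example.com/b"], fake_fetch)
    print(pages["https://example.com/b"])  # → <html>https://example.com/b</html>
```

Threads suit this I/O-bound workload; CPU-heavy parsing of very large crawls may instead call for separate processes.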

Customization options: Look for a crawler that allows customization and configuration to suit your specific requirements. This includes setting crawl depth, specifying crawling rules, and defining the data to be extracted.
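Crawl depth and crawling rules are often expressed as a small configuration object that is consulted before each URL is queued. The field names below are hypothetical and only illustrate the idea: depth is counted as the number of links followed from the start page, and exclude rules take precedence over include rules.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class CrawlRules:
    """Hypothetical crawl configuration: a depth limit plus host and path rules."""

    max_depth: int = 3
    allowed_hosts: set = field(default_factory=set)  # empty set means any host
    include_prefixes: tuple = ("/",)
    exclude_prefixes: tuple = ()

    def should_crawl(self, url, depth):
        """Return True if `url`, found `depth` links from the start page, is in scope."""
        if depth > self.max_depth:
            return False
        parsed = urlparse(url)
        if self.allowed_hosts and parsed.netloc not in self.allowed_hosts:
            return False
        path = parsed.path or "/"
        if path.startswith(self.exclude_prefixes):
            return False  # exclusions win over inclusions
        return path.startswith(self.include_prefixes)


if __name__ == "__main__":
    rules = CrawlRules(
        max_depth=2,
        allowed_hosts={"example.com"},
        include_prefixes=("/blog/",),
        exclude_prefixes=("/blog/drafts/",),
    )
    print(rules.should_crawl("https://example.com/blog/post-1", depth=1))    # → True
    print(rules.should_crawl("https://example.com/blog/drafts/x", depth=1))  # → False
    print(rules.should_crawl("https://example.com/blog/post-2", depth=3))    # → False
    print(rules.should_crawl("https://other.org/blog/post", depth=0))        # → False
```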

User interface and ease of use: A clear, user-friendly interface simplifies setting up crawls and makes the collected data easier to navigate and interpret.

Support and documentation: Check for available support channels (e.g., email, chat, forums) and the availability of documentation and tutorials to assist you in using the crawler effectively.

Cost and pricing model: Consider the cost and pricing structure of the crawler, including any subscription fees, usage limits, or additional charges for advanced features.

Things to look out for in a website crawler include:

Compliance with website policies: Ensure that the crawler you choose respects robots.txt rules and each site's published policies. Honoring them reduces, but does not by itself eliminate, legal and ethical risk.
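Python's standard library includes `urllib.robotparser` for checking robots.txt rules. The sketch parses a sample robots.txt held in a string (the rules and the `ExampleBot` user agent are made up for illustration); against a live site you would instead call `set_url()` with the site's robots.txt URL and then `read()`.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content, made up for this example.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /cart
"""


def load_rules(robots_txt):
    """Build a RobotFileParser from robots.txt text that was already downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = load_rules(SAMPLE_ROBOTS_TXT)
    for url in (
        "https://example.com/blog/post",
        "https://example.com/private/report",
        "https://example.com/cart",
    ):
        # A polite crawler skips any URL for which can_fetch() is False.
        print(url, rules.can_fetch("ExampleBot", url))
    # prints True for /blog/post and False for the other two URLs
```

Note that `Disallow` rules match by path prefix, so `/cart` also blocks a path such as `/cartography`.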

Crawl speed and resource usage: Some crawlers consume significant resources (e.g., bandwidth, CPU) while crawling, and an aggressive crawl can also overload the servers being crawled. Make sure the crawler's speed and concurrency settings suit both your own hosting environment and the sites you target.
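One simple way to keep resource usage in check is to enforce a minimum interval between requests to the same host. This is a single-threaded sketch with an assumed default of one second between requests per host; the class name is hypothetical, it is not thread-safe, and its clock and sleep functions are injectable so the logic can be tested without real waiting.

```python
import time
from urllib.parse import urlparse


class HostThrottle:
    """Enforce a minimum delay between consecutive requests to the same host."""

    def __init__(self, min_interval=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self._last = {}  # host -> time the previous request to it was allowed

    def wait(self, url):
        """Block until a request to url's host is allowed; return seconds slept."""
        host = urlparse(url).netloc
        now = self.clock()
        last = self._last.get(host)
        delay = 0.0
        if last is not None:
            delay = max(0.0, self.min_interval - (now - last))
        if delay:
            self.sleep(delay)
        self._last[host] = now + delay
        return delay
```

Call `wait(url)` immediately before each request. If a site's robots.txt specifies a `Crawl-delay`, `RobotFileParser.crawl_delay()` can supply a per-site value instead of a fixed interval.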

Proxies and IP rotation: If you need to crawl websites that impose limitations or restrictions, consider a crawler that supports proxies or IP rotation to prevent IP blocking or rate limiting.

Data privacy and security: If the website you are crawling contains sensitive or personal data, choose a crawler that prioritizes data privacy and provides secure data handling mechanisms.

By considering these factors and being aware of potential pitfalls, you can choose a website crawler that best fits your specific requirements and maximizes the benefits it provides.